A highly regulated organization proved that AI can deliver fast, measurable value when it starts with trusted metadata.

- They began with a task teams hated: tagging group photos

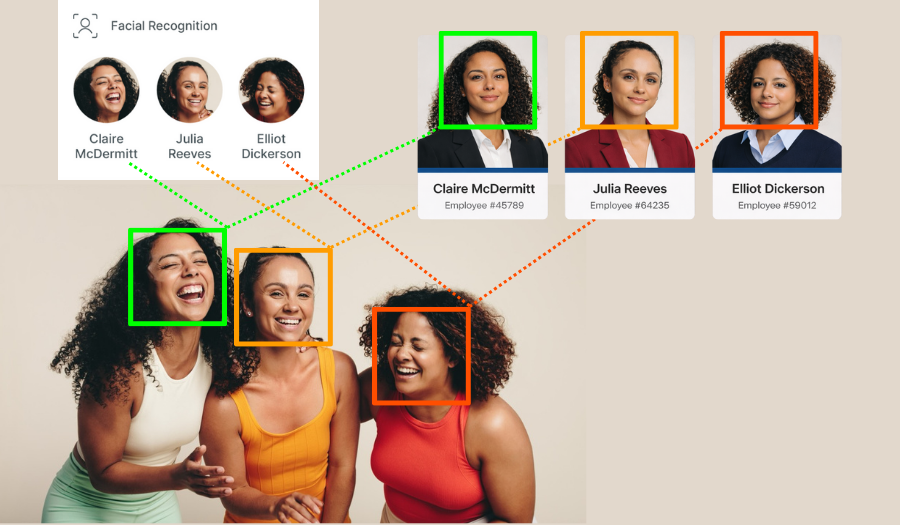

- They trained facial recognition using existing, well-governed portrait metadata

- Automation focused on new content, not historical cleanup

- Clear guardrails limit risk in a regulated environment

The result: a 70% reduction in tagging time and rapid adoption driven by visible, measurable results, not hype.

Most organizations are cautious about AI, especially in regulated environments. Will it work? Will it introduce risk? And if it does work, what does that mean for the people doing the work today?

A large, highly regulated organization proved something many teams still doubt: AI can deliver real value, quickly. Instead of starting with a sweeping AI roadmap or a long list of promised outcomes, they called a meeting.

Instead of leading with AI features, this team led with empathy. They asked a simple question of their team: What is the task you hate the most? The answer was immediate and unanimous. Tagging group photos.

Group photos were slow to process, hard to label accurately, and rarely arrived with names. The work was repetitive, detail-heavy, and time-consuming. It was also essential. If tags were wrong or missing, downstream teams could not find or reuse the content with confidence.

That made group photos the ideal first AI use case. The pain was real. The impact would be visible. And success could be accurately measured.

Start where the metadata is already strong

The real accelerator was not AI alone. It was the data.

This organization already managed a large, well-governed collection of employee portraits. Each portrait carried consistent metadata, including a unique identifier tied to the individual. Employees refreshed portraits on a regular cadence, which kept the dataset current and reliable.

Instead of treating AI as something that required a new data strategy, the team anchored facial recognition to what already worked. The existing portrait metadata became the training set. No reinvention. No parallel system. Just a smarter use of trusted information.

By mapping facial recognition to established identifiers rather than names alone, the team trained the system quickly and with confidence. What might have taken months in another environment took days.

Automate carefully, then measure everything

Once facial recognition was in place for new content, the team took the next step. They automated training across roughly 90,000 active portraits.

The automation ran over a weekend. Cleanup took a few hours, mostly to resolve duplicate tags created during the transition from manual to automated processes. That small detail matters. It shows the difference between a dataset that is “perfect” and one that is governed enough to scale.

The team measured everything. Publication timelines, processing time, and downstream impact were tracked consistently. Within six months, they saw a roughly 70 percent reduction in the time required to publish group photos.

This was not an abstract efficiency gain. It showed up immediately in day-to-day work.

Change management made the difference

Technology alone did not create adoption. Change management did.

The team used a structured framework to introduce AI gradually. First came awareness. Then education. Only after that did they focus on ability and reinforcement.

They were direct about concerns, especially the fear that AI might replace jobs. Instead of dismissing those fears, they let the results speak. The same staff who processed portraits every day were the first to embrace facial recognition once they saw how much manual effort it removed.

The turning point came when accuracy reached a consistent threshold. Once most faces were correctly identified without manual intervention, skepticism gave way to trust. AI was no longer theoretical. It was useful, and the team immediately began to look for other ways to implement AI into other workflows.

Guardrails matter in regulated environments

Equally important was what this organization chose not to automate.

Facial recognition was limited to employees. The team did not apply it to donors or other non-employees, where consent, authorization, or regional regulations could introduce risk. They also avoided retroactively processing historical group photos, focusing instead on new content moving forward.

This discipline mattered. In regulated environments, AI success depends as much on restraint as it does on innovation. Teams responsible for rights, consent, and governance should shape AI scope from day one.

Build momentum, then expand

With facial recognition delivering clear returns, the team began exploring next steps. These included automating confidence scoring so high-confidence matches could move forward automatically, while uncertain cases were flagged for review.

They also explored generative AI derivatives for simple, controlled edits, paired with content provenance standards to ensure transparency as assets moved through delivery channels.

Each new use case followed the same rule: clear value, clear guardrails, and measurable impact.

The pattern any organization can follow

This story is not about facial recognition. It is about approach.

If you want quick wins with AI inside DAM, the path is practical:

- Start with the task your team dreads the most

- Anchor AI to metadata you already trust

- Automate new work before revisiting the past

- Measure results and share them openly

- Use governance to define boundaries, not block progress

AI adoption does not start with ambition. It starts with relief.

When teams see AI remove friction from real work, skepticism fades. Trust builds. And momentum follows. To learn more about how Orange Logic can help your team harness the power of your existing metadata to train AI, schedule all call.

Publisher: Source link